What is Deduplication in Backup Solutions?

What is Deduplication?

Deduplication, also known as data deduplication, is a process in information technology that identifies redundant data (duplicate detection) and eliminates duplicates before the data is written to a non-volatile medium. The process is performed by deduplication backup software and reduces the amount of data that has to be sent from a sender to a receiver. How much space deduplication saves is hard to predict in advance, because it depends on the structure of the data and on how much of it changes from one backup to the next. Deduplication is most effective where recurring patterns can be detected, as in block-based data.

Today, the primary application of deduplication is data backup, where in practice it usually achieves a greater reduction in data volume than other methods. In principle, the method is suitable for any application in which data is copied repeatedly and stored in blocks.

How Deduplication Works

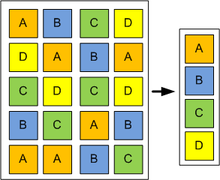

Deduplication systems divide files into blocks of equal size (usually a power of two) and calculate a checksum for each block. Working at block level is what distinguishes deduplication from single instance storage (SIS), which only eliminates identical whole files.
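As a minimal sketch of this step, the Python function below splits data into fixed-size blocks and computes one checksum per block. It assumes SHA-256 (from the standard `hashlib` module) as the checksum and a 4096-byte default block size; both are illustrative choices, and the name `block_checksums` is hypothetical. The last block may be shorter than the block size.

```python
import hashlib

def block_checksums(data: bytes, block_size: int = 4096) -> list[str]:
    """Split `data` into fixed-size blocks and return one SHA-256 hex digest per block.

    block_size is assumed to be a power of two; the final block may be shorter.
    """
    if block_size <= 0 or block_size & (block_size - 1):
        raise ValueError("block_size must be a power of two")
    return [
        hashlib.sha256(data[offset:offset + block_size]).hexdigest()
        for offset in range(0, len(data), block_size)
    ]

# Two hypothetical files that differ only in their second block.
file_a = b"A" * 4096 + b"B" * 4096
file_b = b"A" * 4096 + b"C" * 4096

# Whole-file checksums (what single instance storage compares) differ...
print(hashlib.sha256(file_a).hexdigest() == hashlib.sha256(file_b).hexdigest())  # False
# ...but the first block checksum is shared, so that block can be deduplicated.
print(block_checksums(file_a)[0] == block_checksums(file_b)[0])  # True
```

SIS would have to keep both files in full, while block-level deduplication stores the shared first block only once.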

All checksums are then stored together with a reference to the corresponding file and the position of the block within that file. When a new file is added, its contents are also divided into blocks and a checksum is calculated for each block. The system then checks whether each checksum already exists. Comparing checksums is much faster than comparing file contents directly. A matching checksum indicates that an identical block may have been found, but the contents must still be compared, because two different blocks can produce the same checksum (a collision).
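The lookup-and-verify step can be sketched as follows. To make a collision easy to reproduce, this sketch deliberately uses a weak toy checksum (the sum of the bytes modulo 256) and a 4-byte block size; neither is what a real system would use. `BlockIndex` and its methods are hypothetical names. Each index entry records the file name and offset, as described above, plus the block contents so that a checksum match can be confirmed byte for byte.

```python
BLOCK_SIZE = 4  # hypothetical, tiny so the example stays readable

def toy_checksum(block: bytes) -> int:
    # Deliberately weak (sum of bytes mod 256) so that collisions are easy to provoke.
    return sum(block) % 256

class BlockIndex:
    def __init__(self) -> None:
        # checksum -> list of (file name, offset, block contents)
        self.entries: dict[int, list[tuple[str, int, bytes]]] = {}

    def find(self, block: bytes):
        """Return (file name, offset) of an identical stored block, or None."""
        for name, offset, stored in self.entries.get(toy_checksum(block), []):
            # A checksum match is only a hint: compare contents to rule out a collision.
            if stored == block:
                return (name, offset)
        return None

    def add_file(self, name: str, data: bytes) -> list:
        """Index every block of `data`; return (offset, earlier location or None) per block."""
        results = []
        for offset in range(0, len(data), BLOCK_SIZE):
            block = data[offset:offset + BLOCK_SIZE]
            match = self.find(block)
            if match is None:
                # New content: remember where it lives under its checksum.
                self.entries.setdefault(toy_checksum(block), []).append((name, offset, block))
            results.append((offset, match))
        return results

index = BlockIndex()
print(index.add_file("a.txt", b"abcdwxyz"))  # [(0, None), (4, None)]
# b"dcba" has the same toy checksum as b"abcd" but different contents -> not a duplicate.
print(index.add_file("b.txt", b"abcddcba"))  # [(0, ('a.txt', 0)), (4, None)]
```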

If an identical block is found, only one copy is kept and the duplicate is replaced by a reference to it. The reference requires less storage than the block itself.
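A minimal sketch of replacing duplicates with references, assuming SHA-256 digests serve as the references and a 4096-byte block size (both illustrative). To keep it short, this sketch trusts the digest instead of re-comparing the bytes of matching blocks. `deduplicate` and `restore` are hypothetical names.

```python
import hashlib

def deduplicate(data: bytes, block_size: int = 4096):
    """Store each unique block once; describe the file as a list of references."""
    store: dict[bytes, bytes] = {}  # digest -> block contents
    refs: list[bytes] = []          # one 32-byte digest per block, in file order
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).digest()
        store.setdefault(digest, block)  # keep only the first copy
        refs.append(digest)
    return store, refs

def restore(store: dict[bytes, bytes], refs: list[bytes]) -> bytes:
    """Reassemble the original data by following the references."""
    return b"".join(store[digest] for digest in refs)

data = b"A" * 4096 * 3 + b"B" * 4096         # four blocks, three of them identical
store, refs = deduplicate(data)
print(len(store), len(refs))                 # 2 4
print(sum(len(b) for b in store.values()))   # 8192 bytes of block data instead of 16384
print(len(refs) * 32)                        # 128 bytes of references
print(restore(store, refs) == data)          # True
```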

There are two methods for deciding which copy of a duplicated block is kept. With “reverse referencing”, the first occurrence of a block is stored and all later identical blocks receive a reference to it. With “forward referencing”, the most recent occurrence is stored and earlier occurrences are changed to reference it. The choice depends on whether data should be stored faster or restored faster: reverse referencing only has to write a reference for each new duplicate, whereas forward referencing keeps the newest data in place, which tends to speed up restores of the latest backup.

A separate distinction is between “in-band” and “out-of-band” deduplication, i.e. whether the data stream is analyzed on the fly or only after it has been stored at the destination. In the first case, only a single data stream can be analyzed; in the second, the data can be analyzed in parallel using multiple data streams.
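The difference between reverse and forward referencing can be illustrated with a short sketch that, given blocks in the order they were backed up, reports for each position which position holds the physically stored copy. Blocks are represented by hypothetical labels instead of real data, and the whole sequence is processed at once; a real forward-referencing system would instead re-point existing references each time a newer copy arrives.

```python
def reverse_referencing(blocks: list[str]) -> list[int]:
    """Keep the FIRST occurrence of each block; later duplicates point back to it."""
    first: dict[str, int] = {}
    owners = []
    for pos, block in enumerate(blocks):
        first.setdefault(block, pos)
        owners.append(first[block])
    return owners

def forward_referencing(blocks: list[str]) -> list[int]:
    """Keep the LAST occurrence of each block; earlier duplicates point forward to it."""
    last: dict[str, int] = {}
    for pos, block in enumerate(blocks):
        last[block] = pos  # later occurrences overwrite earlier ones
    return [last[block] for block in blocks]

blocks = ["a", "b", "a", "c", "a"]  # hypothetical block labels in backup order
print(reverse_referencing(blocks))  # [0, 1, 0, 3, 0]
print(forward_referencing(blocks))  # [4, 1, 4, 3, 4]
```

With forward referencing, the most recent occurrence of block `a` (position 4) is the stored copy, so the newest data can be read without jumping back to older positions.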

Example

When hard disks are backed up to tape, the proportion of new data between two full backups is usually small compared with the unchanged data. Without deduplication, however, two full backups still require at least twice the tape capacity of the original data. Deduplication recognizes the identical data components: unique segments are recorded in a list, and when a segment reappears, only its time and position in the data stream are noted so that the original data can be reconstructed on restore. As a consequence, the backups are no longer independent full backups: if a stored segment or an earlier version is lost, every backup that refers to it can no longer be fully restored. Like incremental backups, deduplication therefore trades a higher risk of data loss for lower storage requirements.
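The storage arithmetic and the dependency between backups can be sketched with a hypothetical disk of 100 blocks of 4096 bytes, of which 5 change between two full backups. The figures are invented for illustration, and `make_disk`, `store_backups` and `restorable` are hypothetical helpers.

```python
import hashlib

BLOCK_SIZE = 4096

def make_disk(values):
    # Hypothetical disk image: block i is filled with the 4-byte value values[i].
    return b"".join(v.to_bytes(4, "big") * (BLOCK_SIZE // 4) for v in values)

def store_backups(backups):
    """Deduplicate several full backups into one block store plus one recipe per backup."""
    store, recipes = {}, []
    for data in backups:
        recipe = []
        for offset in range(0, len(data), BLOCK_SIZE):
            block = data[offset:offset + BLOCK_SIZE]
            digest = hashlib.sha256(block).digest()
            store.setdefault(digest, block)  # unique segments are stored once
            recipe.append(digest)            # every backup only lists references
        recipes.append(recipe)
    return store, recipes

def restorable(store, recipe):
    return all(digest in store for digest in recipe)

full_1 = make_disk(range(100))
full_2 = make_disk([1000 + i if i < 5 else i for i in range(100)])  # blocks 0-4 changed

store, recipes = store_backups([full_1, full_2])
print(sum(len(r) for r in recipes))  # 200 blocks without deduplication
print(len(store))                    # 105 unique blocks with deduplication

# Losing one shared block damages both "full" backups at once.
del store[recipes[0][50]]
print([restorable(store, r) for r in recipes])  # [False, False]
```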

Deduplication and Chunking

Splitting the source file into blocks is called chunking. The goal is to chunk the data so that as many identical blocks as possible arise, because only identical blocks can be deduplicated. Uniquely identifying each block is called fingerprinting and can be done, for example, with a cryptographic hash function.
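The text does not prescribe a particular chunking method. As one hedged illustration, the sketch below uses content-defined chunking, a common technique in which cut points are derived from the data itself via a rolling hash, so that inserting a byte tends to shift only nearby boundaries instead of every following fixed-size block. The gear table, `MASK`, `MIN_SIZE` and `MAX_SIZE` are arbitrary assumptions for a small demo, not values from any particular product; SHA-256 serves as the fingerprint.

```python
import hashlib
import random

# Hypothetical parameters for a small demo.
_rng = random.Random(42)
GEAR = [_rng.getrandbits(32) for _ in range(256)]  # one pseudo-random value per byte value
MASK = 0x3F                                        # roughly a 1-in-64 chance of a cut per byte
MIN_SIZE, MAX_SIZE = 16, 256

def cdc_chunks(data: bytes) -> list[bytes]:
    """Content-defined chunking: cut points depend on the bytes, not on fixed offsets."""
    chunks, start, h = [], 0, 0
    for i, byte in enumerate(data):
        # Gear-style rolling hash: older bytes shift out of the 32-bit value.
        h = ((h << 1) + GEAR[byte]) & 0xFFFFFFFF
        size = i - start + 1
        if (size >= MIN_SIZE and (h & MASK) == 0) or size >= MAX_SIZE:
            chunks.append(data[start:i + 1])
            start, h = i + 1, 0
    if start < len(data):
        chunks.append(data[start:])  # trailing partial chunk
    return chunks

def fingerprints(chunks: list[bytes]) -> list[str]:
    """Fingerprint each chunk with SHA-256."""
    return [hashlib.sha256(c).hexdigest() for c in chunks]

data = random.Random(7).randbytes(4096)
edited = data[:100] + b"X" + data[100:]  # one byte inserted near the start
shared = set(fingerprints(cdc_chunks(data))) & set(fingerprints(cdc_chunks(edited)))
print(len(cdc_chunks(data)), len(shared))
```

With fixed-size blocks, the same one-byte insertion would shift every later block boundary, so the blocks after the insertion point would generally no longer match.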

The finer the granularity at which changes to a file can be detected, the less redundant data has to be backed up. However, smaller blocks also enlarge the index, i.e. the blueprint that describes how, and from which blocks, the file is reassembled during a restore. This tradeoff must be taken into account when choosing the block size for chunking.
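The tradeoff can be made concrete with a small experiment: a hypothetical 64 KiB file of pseudo-random bytes is modified in a single byte, and for several block sizes the sketch counts how many bytes must be stored anew and how large the new version's index becomes. It assumes each index entry is just a 32-byte SHA-256 fingerprint; real indexes typically store more metadata per entry. `tradeoff` is a hypothetical helper.

```python
import hashlib
import random

DIGEST_SIZE = 32  # assumed bytes per index entry (a bare SHA-256 fingerprint)

def tradeoff(original: bytes, modified: bytes, block_size: int) -> tuple[int, int]:
    """Return (new bytes to store, index size in bytes) for backing up `modified`."""
    known = {
        hashlib.sha256(original[i:i + block_size]).digest()
        for i in range(0, len(original), block_size)
    }
    new_bytes = entries = 0
    for i in range(0, len(modified), block_size):
        block = modified[i:i + block_size]
        entries += 1  # every block needs an index entry, changed or not
        if hashlib.sha256(block).digest() not in known:
            new_bytes += len(block)
    return new_bytes, entries * DIGEST_SIZE

original = random.Random(0).randbytes(64 * 1024)  # hypothetical 64 KiB file
changed = bytearray(original)
changed[10_000] ^= 0xFF                           # flip one byte
modified = bytes(changed)

for block_size in (4096, 1024, 256):
    print(block_size, tradeoff(original, modified, block_size))
# 4096 (4096, 512)
# 1024 (1024, 2048)
# 256 (256, 8192)
```

In this setup, each step down in block size cuts the redundantly stored data by a factor of four while the index grows by the same factor.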