Data Deduplication Considerations

We’re told that deduplication combined with large Hyper-V virtual machines leads to tremendous storage savings. However, there are underlying assumptions and trade-offs to consider, because not every scenario turns out to be advantageous just because a deduplication algorithm is deployed.

Additional CPU processing: first of all, additional CPU work is necessary whenever data is accessed (read or written); hence this may be an issue on systems with limited CPU power.

Deduplication may lead to shorter processing times if a slow target is being used, for example, if your Hyper-V backup software writes to a slow NAS device or pushes files online via FTP backup or cloud backup. The much slower upload speed makes it worthwhile to apply deduplication, as most businesses are not yet connected to unlimited fiber optic internet.
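To make the trade-off concrete, here is a rough back-of-the-envelope sketch. The function names and every number in it (backup size, link speed, hashing throughput, share of new data) are illustrative assumptions, not measurements, and it assumes hashing and uploading happen one after the other rather than in parallel.

```python
def transfer_seconds(size_bytes: float, link_bytes_per_s: float) -> float:
    """Time to push size_bytes over a link of the given throughput."""
    return size_bytes / link_bytes_per_s


def dedup_backup_seconds(
    size_bytes: float,
    link_bytes_per_s: float,
    unique_fraction: float,
    hash_bytes_per_s: float,
) -> float:
    """Estimated time when only new blocks are uploaded.

    unique_fraction is the share of the data that is not already on the
    target (assumed). hash_bytes_per_s is the CPU cost of deduplication,
    expressed as hashing throughput (assumed).
    """
    hashing = size_bytes / hash_bytes_per_s
    upload = transfer_seconds(size_bytes * unique_fraction, link_bytes_per_s)
    return hashing + upload


# Hypothetical scenario: 100 GB virtual disk, 10 Mbit/s uplink (1.25 MB/s),
# 5% changed data, 200 MB/s hashing speed.
plain = transfer_seconds(100e9, 1.25e6)
deduped = dedup_backup_seconds(100e9, 1.25e6, 0.05, 200e6)
print(f"plain upload: {plain / 3600:.1f} h, with deduplication: {deduped / 3600:.1f} h")
```

Under these assumed numbers the plain upload takes about 80,000 seconds (roughly 22 hours), while the deduplicated run takes about 4,500 seconds. Raise the link speed to that of a fast local disk and the hashing term starts to dominate, which is why the benefit depends so heavily on how slow the target is.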

A multistep restore or access may be necessary to reach the data. This again matters when you use slow storage media. Tape, for example, with its very long seek times, would make deduplication unfeasible because each seek may take seconds rather than the milliseconds typical of hard drives.

Hence, if frequent access is needed or fast restores are a must, the target media needs to offer fast random access. At this time the cheapest storage media offering both enormous storage capacity and minuscule seek times for random access is the mechanical hard drive. By the way, Seagate now offers 8 TB drives.

Deduplication allows backups to be sent efficiently over slow, high-latency links, provided a small local cache is available. High latency is similar to long seek times: while bursts of data may be high-speed, latency can cause major slowdowns. Deduplication on a local cache before upload may help minimize this phenomenon.

Interdependencies of backups and data blocks are another issue to consider, and they potentially increase the risk of data corruption. Since files are spread out over many blocks that depend on and refer to one another, a bad block may now affect thousands of files instead of just one. Some deduplication systems track blocks live to skip full file scans. The risk with those kinds of systems is that if blocks are skipped or not detected for whatever reason, it can lead to major corruption. In addition, such systems may need to slow down disk access in some scenarios.
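The shared-block risk can be illustrated with a toy content-addressed store. This is a minimal sketch, not how any particular backup product works: the `BlockStore` class, its method names, and the 4-byte block size are all made up for the demonstration (real systems use blocks of kilobytes or more).

```python
import hashlib

BLOCK_SIZE = 4  # deliberately tiny so the demo is easy to follow


class BlockStore:
    """Toy deduplicating store: each unique block is kept exactly once."""

    def __init__(self):
        self.blocks = {}  # digest -> block bytes
        self.files = {}   # file name -> ordered list of digests

    def put(self, name: str, data: bytes) -> None:
        digests = []
        for i in range(0, len(data), BLOCK_SIZE):
            chunk = data[i:i + BLOCK_SIZE]
            digest = hashlib.sha256(chunk).hexdigest()
            self.blocks.setdefault(digest, chunk)  # store only if new
            digests.append(digest)
        self.files[name] = digests

    def get(self, name: str) -> bytes:
        return b"".join(self.blocks[d] for d in self.files[name])

    def files_affected_by(self, digest: str) -> list:
        """Every file that references the given block."""
        return sorted(n for n, ds in self.files.items() if digest in ds)

    def verify(self) -> list:
        """Digests whose stored bytes no longer match their hash."""
        return [d for d, chunk in self.blocks.items()
                if hashlib.sha256(chunk).hexdigest() != d]


store = BlockStore()
store.put("a", b"AAAABBBB")
store.put("b", b"AAAACCCC")
store.put("c", b"DDDDAAAA")

shared = hashlib.sha256(b"AAAA").hexdigest()
store.blocks[shared] = b"XXXX"          # simulate a single bad block
print(store.files_affected_by(shared))  # all three files reference it
```

Three files are stored in four unique blocks, so damaging the one shared block corrupts all three files at once. The `verify` pass shows the usual countermeasure: re-hashing stored blocks detects the damage, at the cost of exactly the kind of full scan that live block tracking tries to avoid.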

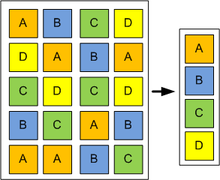

In-file deltas versus global deduplication: a file system offering deduplication may work well for backups and other stale data that is often read but infrequently written; it may, however, be too complex and slow for real-time usage because of the additional work involved in storing and processing data. Since storage costs are falling rapidly every year, it makes sense to use deduplication only for archiving and transmission purposes.

Because more processing is required when data is accessed and on-disk storage is much more complex due to interdependencies, deduplication is a tool that may or may not pay off, depending on the nature of your data and the performance of the hardware involved. Ideal scenarios for deduplication include VMware backups and Hyper-V backups because of the rather small rate of change inside virtual machines. Stale files, such as video files that aren’t being edited, generally do not benefit from deduplication, unless they are replicated over many storage points.
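One way to check whether your own data is a good candidate is to measure how many of its blocks are actually unique. The helper below is a simple sketch that assumes fixed-size blocks; the name `unique_block_fraction` and the 4 KiB block size are illustrative choices, and real products may chunk data differently.

```python
import hashlib


def unique_block_fraction(data: bytes, block_size: int = 4096) -> float:
    """Share of fixed-size blocks that are unique (1.0 means no savings)."""
    if not data:
        return 1.0
    total = 0
    seen = set()
    for i in range(0, len(data), block_size):
        seen.add(hashlib.sha256(data[i:i + block_size]).digest())
        total += 1
    return len(seen) / total


# Ten identical blocks: only one of them has to be stored.
repetitive = b"A" * 4096 * 10
# Ten blocks that all differ: nothing can be shared.
distinct = b"".join(bytes([i]) * 4096 for i in range(10))

print(unique_block_fraction(repetitive))
print(unique_block_fraction(distinct))
```

A low fraction suggests deduplication will save space. A value close to 1.0, which is what a single copy of compressed video would typically produce, suggests the extra processing is unlikely to pay off.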